
In some situations, a generic file may need to be created for testing purposes. The command below can create a file of the size specified. For example, to test a file system or backup software's ability to detect capacity or files, use this command to create an MP3 file of exactly 100MB.
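Outside a Windows Command Prompt, a test file of an exact size can also be produced with a short script. The following is a minimal sketch in Python, not part of the original instructions; the function name `create_file` and the example path are hypothetical, and it assumes that "100MB" means 100 × 1024 × 1024 bytes.

```python
def create_file(path, size_bytes):
    """Create (or overwrite) a file that is exactly size_bytes long.

    Hypothetical helper for illustration. On most platforms the new
    bytes read back as zeros, and the file may be stored sparsely.
    """
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    with open(path, "wb") as fh:
        # Opening with "wb" empties the file; truncate() then extends
        # it to the requested length.
        fh.truncate(size_bytes)


# Assumption: 1 MB is taken as 1024 * 1024 bytes.
HUNDRED_MB = 100 * 1024 * 1024
```

Calling `create_file("test.mp3", HUNDRED_MB)` would produce a 104,857,600-byte file with an `.mp3` extension. Like any generated filler file, it contains no valid audio data, so it is only useful for size and capacity tests.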

Create a File of a Specific Size

- Open a Command Prompt.

- Type the following command:

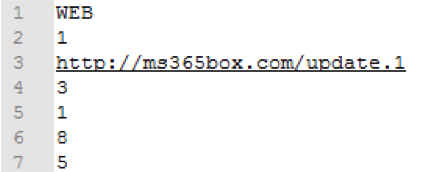

`fsutil file createnew`

In its current form, this application offers a few advantages over common `shuf`-based approaches:

- On small k, it performs roughly 2.25-2.75x faster than `shuf` in informal tests on OS X and Linux hosts.

- It uses much less memory than the usual reservoir sampling approach that stores a pool of sampled elements; instead, `sample` stores the start positions of sampled lines (8 bytes per line).

- Using less memory gives `sample` an advantage over `shuf` for whole-genome scale files, helping avoid `shuf: memory exhausted` errors. For instance, a 2 GB allocation would allow a sample size up to ~268M random elements (sampling without replacement).
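The memory argument above can be illustrated with a small sketch. This is not the tool's actual source; it is a Python approximation that assumes a standard reservoir sampling scheme (Algorithm R) applied to line start offsets rather than to the lines themselves. The function name `sample_line_offsets` is hypothetical, and the 2 GB figure is assumed to mean 2 × 1024³ bytes.

```python
import random
from array import array


def sample_line_offsets(path, k, seed=None):
    """Reservoir-sample up to k line start offsets from a file.

    Only byte offsets are kept, never the line contents, so memory
    use grows with k and not with the length of the lines.
    """
    rng = random.Random(seed)   # CPython's random module is a Mersenne Twister
    reservoir = array("Q")      # unsigned 64-bit integers, typically 8 bytes each
    offset = 0
    with open(path, "rb") as fh:
        for n, line in enumerate(fh):
            if n < k:
                # Fill the reservoir with the first k line starts.
                reservoir.append(offset)
            else:
                # Keep line n with probability k / (n + 1).
                j = rng.randint(0, n)   # both bounds inclusive
                if j < k:
                    reservoir[j] = offset
            offset += len(line)
    return reservoir


# Assumption: 2 GB means 2 * 1024**3 bytes, at 8 bytes per stored offset.
max_sample = (2 * 1024**3) // 8   # 268,435,456 offsets, i.e. roughly 268M
```

Storing an 8-byte offset per sampled line is what makes the per-element cost independent of line length, which is where the ~268M figure for a 2 GB allocation comes from.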

The `sample` tool stores a pool of line positions and makes two passes through the input file. One pass generates the sample of random positions, using a Mersenne Twister to generate uniformly random values, while the second pass uses those positions to print the sample to standard output. To minimize the expense of this second pass, we use `mmap` routines to gain random access to data in the (regular) input file on both passes.

The benefit that `mmap` provided was significant. For comparison purposes, we also added a `--cstdio` option to test the performance of standard C I/O routines (`fseek()`, etc.); predictably, this performed worse than the `mmap`-based approach in all tests, but timing results were about identical with `gshuf` on OS X and still an average 1.5x improvement over `shuf` under Linux.

The `sample` tool can be used to sample from any text file delimited by newline characters (BED, SAM, VCF, etc.).

By adding the `--preserve-order` option, the output sample preserves the input order. For example, when sampling from an input BED file that has been sorted by BEDOPS `sort-bed` (which applies a lexicographical sort on chromosome names and a numerical sort on start and stop coordinates), the sample will also have the same ordering applied, with a relatively small O(k log k) penalty for a sample of size k.

By omitting the sample size parameter, the `sample` tool can shuffle the entire file. This tool can be used to shuffle files that `shuf` has trouble with; however, it currently operates slower than `shuf`, where `shuf` can be used. We recommend use of `shuf` for shuffling an entire file, or specifying the sample size (up to the line count, if known ahead of time), when possible.
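The two-pass design and the order-preserving option can be sketched as follows. This is a hedged Python illustration of the general idea, not the tool's implementation: the function name `sample_lines` and its `preserve_order` parameter are hypothetical, the sampling step is assumed to be plain reservoir sampling, and the result is returned as a list instead of being written to standard output.

```python
import mmap
import os
import random


def sample_lines(path, k, preserve_order=False, seed=None):
    """Return up to k sampled lines (as bytes) from a regular file."""
    if os.path.getsize(path) == 0:
        return []                       # an empty file cannot be memory-mapped
    rng = random.Random(seed)           # Mersenne Twister in CPython
    with open(path, "rb") as fh, \
            mmap.mmap(fh.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        size = len(mm)

        # Pass 1: walk the line starts and reservoir-sample their offsets.
        offsets = []
        pos = 0
        n = 0
        while pos < size:
            if n < k:
                offsets.append(pos)
            else:
                j = rng.randint(0, n)   # both bounds inclusive
                if j < k:
                    offsets[j] = pos
            n += 1
            newline = mm.find(b"\n", pos)
            pos = size if newline == -1 else newline + 1

        if preserve_order:
            offsets.sort()              # the O(k log k) step: restore input order
        else:
            rng.shuffle(offsets)        # assumption: emit in random order otherwise

        # Pass 2: random access through the mapping to fetch each sampled line.
        lines = []
        for start in offsets:
            newline = mm.find(b"\n", start)
            end = size if newline == -1 else newline + 1
            lines.append(mm[start:end])
        return lines
```

Because file offsets increase with line number, sorting the k sampled offsets is enough to reproduce the input ordering, so a file already sorted by `sort-bed` yields a sorted sample without re-sorting the records themselves.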

One downside at this time is that `sample` does not process a standard input stream; the input must be a regular file.